The representation of space in the hippocampal formation has been studied extensively over the past four decades, culminating in the 2014 Nobel Prize in Physiology or Medicine. However, an important question remains unresolved: is the role of this brain structure in spatial processing restricted to mammals, or can its origins be found in other classes of vertebrates?

In this project we study two species of birds: the Japanese quail and the barn owl. The quail is a ground-dwelling bird and an efficient forager, whereas the barn owl is a nocturnal predator that tends to perch on high branches and scan its surroundings from afar, searching for distal visual and auditory cues.

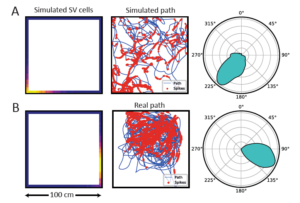

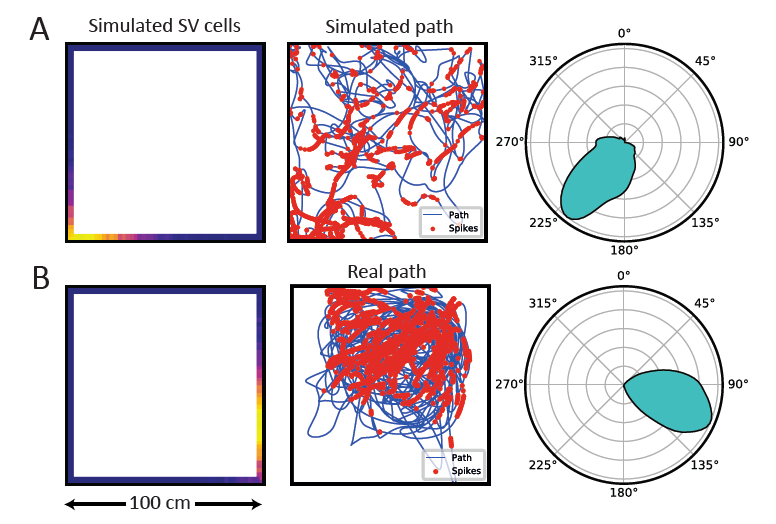

We use techniques for recording from freely behaving animals to measure neuronal activity in birds as they forage on the ground (2D), search from afar, or fly in 3D space. A variety of avian behavioral tasks are designed to search for place cells, spatial-view cells and other types of space-coding cells.

The process known as visual search is studied extensively in humans and other animals. What attracts visual gaze, and how barn owls search for interesting stimuli, are the main questions addressed in this project. We combine kinematic measurements of head movements with gaze-point tracking. Experiments are performed both with spontaneously behaving owls and with trained owls performing a controlled visual task on a computer screen. To measure head kinematics, we attach infrared reflectors to the owl’s head and track their positions with a Vicon motion-capture system. To follow the owl’s point of gaze in real time, a wireless miniature video camera is mounted on its head. Using these techniques, ongoing experiments address the following topics: combining visual and auditory information for saliency mapping, detection of camouflaged objects, pop-out perception, and the role of active head movements in visual perception. Results from the behavioral experiments are combined with results from physiological experiments conducted in our lab to understand the mechanisms of visual search in barn owls.
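As a rough illustration of how head kinematics can be derived from tracked reflector positions, the sketch below computes head yaw and pitch from the 3D coordinates of two markers. The marker placement (one at the back of the head, one toward the beak), the lab coordinate convention (z pointing up), and the function name `head_direction` are hypothetical assumptions for illustration; this is not the lab's analysis pipeline and does not use the Vicon software interface.

```python
import math
from typing import Tuple

Point = Tuple[float, float, float]


def head_direction(rear: Point, front: Point) -> Tuple[float, float]:
    """Return (yaw, pitch) in degrees of the vector from the rear to the front marker.

    Hypothetical convention: right-handed lab frame with z pointing up.
    Yaw is measured counterclockwise from the +x axis in the horizontal plane;
    pitch is positive when the head points upward.
    """
    dx, dy, dz = (f - r for f, r in zip(front, rear))
    horizontal = math.hypot(dx, dy)  # length of the projection onto the floor plane
    if horizontal == 0 and dz == 0:
        raise ValueError("markers coincide; head direction is undefined")
    yaw = math.degrees(math.atan2(dy, dx))
    pitch = math.degrees(math.atan2(dz, horizontal))
    return yaw, pitch


# Example: head pointing along +y and tilted upward by 45 degrees.
yaw, pitch = head_direction((0.0, 0.0, 0.0), (0.0, 1.0, 1.0))
print(round(yaw, 1), round(pitch, 1))  # 90.0 45.0
```

In practice, a third non-collinear marker would be needed to recover head roll as well; two markers only fix the pointing direction.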

The saliency of a visual object depends on the contrast between the object (the center) and its background. In particular, objects that differ from the background in a single feature may be perceived as more salient. It is not clear to what extent this so-called “pop-out” effect, observed in humans and other primates, also governs saliency perception in non-primates. In this study we searched for neural correlates of pop-out perception in neurons of the barn owl’s optic tectum.
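To make the idea of single-feature contrast concrete, the sketch below scores each element of a display by how much its feature value (for example, luminance) differs from the mean of all other elements. An element that differs in one feature from an otherwise homogeneous background receives the highest score, which is the intuition behind pop-out. This leave-one-out contrast index and the name `feature_contrast` are hypothetical simplifications chosen for illustration, not the measure used in this study.

```python
from typing import List


def feature_contrast(features: List[float]) -> List[float]:
    """Hypothetical contrast index: |feature - mean of all other elements|."""
    n = len(features)
    if n < 2:
        raise ValueError("need at least two elements to define a background")
    total = sum(features)
    # For each element, the background is the mean of the remaining n - 1 elements.
    return [abs(f - (total - f) / (n - 1)) for f in features]


# Eight identical background elements and one odd element at index 4.
display = [0.2, 0.2, 0.2, 0.2, 0.8, 0.2, 0.2, 0.2, 0.2]
scores = feature_contrast(display)
print(scores.index(max(scores)))  # 4
```

A scalar feature keeps the example simple; a circular feature such as orientation would need a wrapped difference instead of a plain subtraction.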